To see the shape of the future, one must first understand the past.

For a couple of years now, I’ve been sporadically chipping away at a post about the telos1 of e/acc (effective accelerationism). But, I kept getting tripped up trying to recount the movement’s historical grounding before looking at where it is aiming - it was just too much background to cram into a single article. I finally realized that I should just separate the efforts; look back first and create a solid foundation, and then look forwards. Besides, as a dirty postrat2, I’m a believer in the importance of vibes and narrative. I’m also a fan of intentional myth-making, such as what Virgil did for Rome with his Aeneid, and what Tolkien attempted for England with his The Lord of the Rings. Though e/acc may largely be a materialistic movement led by shape-rotating progressive apes, I will nevertheless act as a little maker and attempt to memetically splinter the light into many hues that endlessly combine into living shapes that move from mind to mind.3 Thus, this attempt at a nonfictional mythopoesis, for:

I bow not yet before the Iron Crown,

nor cast my own small golden sceptre down.

-Tolkien, Mythopoeia

Perhaps the main challenge I face in documenting the emergence of e/acc is the tangled web of lineages from which it descends. It has as branching, complex, and wide-ranging an ancestry as any family tree. I am going to selectively highlight the parts of that tree that I find particularly interesting and relevant, drawing a strand from each to weave into a new, mythical (i.e., fundamentally human4) understanding of e/acc.

Not only will this mythos serve as a foundation for understanding the telos, but building it will allow me to serve as a good Lorite5, tending to the garden of our collective memory and apprehension of the thoughts and work of those upon whose shoulders we climb.

For, as the Teacher said some twenty-five centuries ago:

The thing that hath been, it is that which shall be; and that which is done is that which shall be done: and there is no new thing under the sun.

Is there any thing whereof it may be said, See, this is new? It hath been already of old time, which was before us.

-Ecclesiastes 1:9-10

Without further ado, I present the cast of mythical characters in roughly chronological order:

The Cosmists

The Jesuit

The Singularitarians

The Cypherpunks

κυβερνήτης (The Helmsman)

(The Doomsayer) נביא של גורל האבדון

The Codifier

The Paradoxical Presequentialists

The Poasters

Let us briefly examine each, before attempting to tie all of this together into a coherent whole.

The Cosmists

Let’s do ourselves the favor of not recounting all of Continental Philosophy,6 and instead, skip straight to Vladimir Vernadsky and Konstantin Tsiolkovsky. Both were members of a movement now known as Russian Cosmism (which included many others and was started by Nikolai Fyodorov). Tsiolkovsky is familiar to many as one of the founders of astronautics. They and their Cosmist contemporaries occupied a pivotal moment in history: in the immediate wake of Darwin, steeped in the first recognizable (to us) science fictions of Shelley and Wells,7 familiar with the works of Nietzsche and Freud, and imbued with an optimism for the future as they watched and participated in the creation of a new Europe built on humanistic principles. They were among the first to think seriously and rigorously about a future in which humanity spread beyond our earthly cradle. These two, in particular, planted many conceptual seeds that would, a hundred years later, flourish into e/acc.

Tsiolkovsky's writings are a fascinating rabbit hole where semi-surrealistic fictions in the vein of Verne merge with philosophical treatises, cosmological musings, and academic papers.8 Vernadsky popularized the term “biosphere” and constructed a tripartite model of successive evolutionary spheres: geosphere, biosphere, and noosphere. The noosphere9 is that world of reason/consciousness/memetics10 which has arisen as a new evolutionary layer from the biosphere (which itself was a new evolutionary layer which arose from the geosphere).

Not only did each sphere lead to the emergence and shape the evolution of the next sphere ‘up’ in the chain, but each sphere also reached back down, changing the one(s) that birthed it.

It’s giants standing on the shoulders of giants all the way down, from Beff11 all the way back to Theia.12

So the geosphere eventually created conditions which led to the emergence of the biosphere, which immediately began acting back on the geosphere. And then, the biosphere eventually begat the noosphere, which itself began altering the biosphere that supports it.13 Thus, there are complex dependencies within the holistic planetary system. Importantly for our purposes, though often overlooked, was Vernadsky’s idea that life was necessarily baked into the starting conditions of the universe and that it functions as a kind of anti-entropic force.14

Which nicely leads us to our next character.

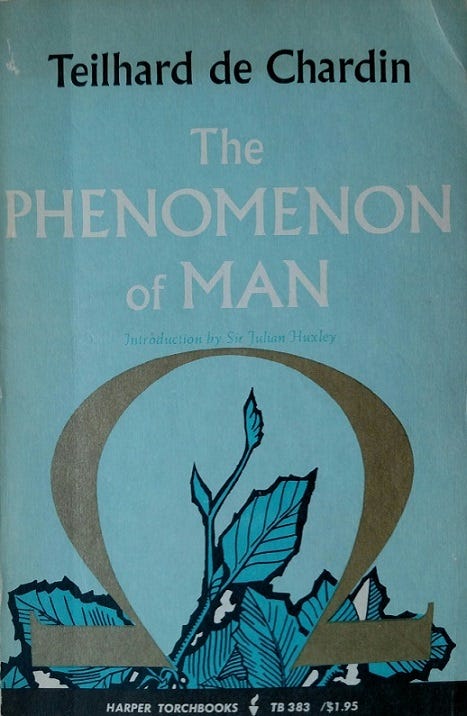

The Jesuit

I first learned about Teilhard de Chardin from the most literarily beautiful science fiction novel I have ever read, Dan Simmons’ Hyperion15. There is much I would say about this amazing novel and its successors were this a book review, but only so much is relevant.

First: not so explicitly but very memetically relevant is right there in the name and utterly littered throughout the novel (and the rest of the series). It is the mythological story of a changing of the guard. From the Titans to the Olympians. From Kronos to Zeus, and Hyperion to Helios.

There was one Titan who did not fight against the Olympians, though he later defied them and greatly suffered for it: Prometheus16. The Titanomachy and Prometheus’ rebellion are the story of progress, of improvement, of the universal telos (of which the current moment [and even all of human history] is but one, small snapshot).

Second, and more directly relevant, is Simmons’ recapitulation of the historic Teilhard’s actual philosophy: that evolution itself is a teleological process arising only from the natural laws of the universe, resulting in a directional orientation. At the time of his writing (1930s-50s), he described everything as a part of this process: starting from the big bang to the evolution of planetary systems (geosphere), leading to the emergence of life (biosphere), which continued to develop until eventually producing consciousness (noosphere). He also envisioned the evolutionary process continuing into the (his) future. The creation of the internet was far in his future, yet it closely resembles a planetary intelligence, a development which he envisioned. The noosphere has expanded, as Teilhard foresaw, and it shows no sign of stopping. He predicted that an eventually coherent planetary intelligence would begin to spread beyond the planet until the noosphere encompassed the entire universe: the “Omega Point”. This would, in his theology17, be God becoming. This Omega Point God would then be (and always have been) retrocausally ‘steering’ the evolutionary process towards its eventual self-actualization.

The Singularitarians

Similar in shape to the idea of the Omega Point is the idea of the “Singularity”. This term is a borrowing from astrophysics that is used as a metaphor18, and it’s worth a brief historical digression to understand both the idea and its history.

Modern computing19 arguably began in England during the Second World War, in the effort to break the cyphers encrypting German military communications. Specifically, it began at the beautiful English country estate known as Bletchley Park, where luminaries such as Alan Turing toiled away while laying many of the foundations of Computer Science. Less famously than Turing, I.J. Good worked there alongside him.

Good, an avid and skilled chess player20, not only helped to break German codes, but he did early work in Bayesian statistics, developed key computing algorithms, and advanced computer engineering as well.21 I’m surprised that I haven’t heard more about him in rationalist circles. That’s cool and all, but it’s not why I’ve included him amongst The Singularitarians.

In Volume 6 of the journal Advances in Computers, Good published the article Speculations Concerning the First Ultraintelligent Machine which was based on talks he had given at conferences in 1962 and ‘3. It’s a pretty good read, and amazingly prescient when compared to state of the art ML/LLMs/AI, while anachronistic in other ways (e.g., imagining the need to use tiny radios to bridge large physical distances between circuits in order to achieve sufficient parallelization - remember, this is contemporaneous with the first production integrated circuits, and almost a decade before the first microprocessors). This article (rather, the talks it was developed from) is the origination of the concept of an intelligence explosion, foom, the AI singularity.

Here are two nice excerpts from the article that illustrate this:

It is more probable than not that, within the twentieth century, an ultraintelligent machine will be built and that it will be the last invention that man need make, since it will lead to an “intelligence explosion.” This will transform society in an unimaginable way. The first ultraintelligent machine will need to be ultraparallel, and is likely to be achieved with the help of a very large artificial neural net. The required high degree of connectivity might be attained with the help of microminiature radio transmitters and receivers. The machine will have a multimillion dollar computer and information-retrieval system under its direct control. The design of the machine will be partly suggested by analogy with several aspects of the human brain and intellect. In particular, the machine will have high linguistic ability and will be able to operate with the meanings of propositions, because to do so will lead to a necessary economy, just as it does in man.

p. 78

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind... Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control. It is curious that this point is made so seldom outside of science fiction. It is sometimes worthwhile to take science fiction seriously.

p. 33

Largely overlapping with Good in the timeline is another illustrious mathematician and computer scientist, one more famously known: John von Neumann.

Creator of cellular automata and originator of the idea to use self-replicating spacecraft to explore the galaxy (von Neumann probes), it’s clear that Johnny was long thinking about exponentials and their future impact. Which makes sense, given that most of his professional life was spent in the development of nuclear weapons from the late ‘30s until his untimely death in 1957. Heavily involved and influential in the Manhattan Project and the development of the first nuclear weapons, he continued after the war with Edward Teller and Stanislaw Ulam in the creation of the H-bomb, and then the ICBMs to deliver them. And, it seems that Johnny boy was the first to use the term “singularity” to describe a coming inflection point as the result of compounding acceleration. A small excerpt from Ulam’s Tribute to John von Neumann published in the Bulletin of the American Mathematical Society:

Quite aware that the criteria of value in mathematical work are, to some extent, purely aesthetic, he once expressed an apprehension that the values put on abstract scientific achievement in our present civilization might diminish: "The interests of humanity may change, the present curiosities in science may cease, and entirely different things may occupy the human mind in the future." One conversation centered on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.

Next up is Vernor Vinge, famed scifi author, who combines Good’s “intelligence explosion” with von Neumann’s “singularity”. In January of 1983, Vinge was given the First Word editorial in OMNI, a science and scifi magazine. This is the first identified public usage of this terminology specifically relating to a coming intelligence explosion.

A decade later, he has significantly fleshed out the idea and is pretty clear-eyed about the risks, challenges, and likely changes to life, the universe, and everything. This can be found in his paper, The Coming Technological Singularity: How to Survive in the Post-Human Era.

And right around this time, the baton was passed to Ray Kurzweil, who began getting very specific in his predictions of the Singularity, and the concept escaped containment. A fascinating character and modern renaissance man, Kurzweil is most relevant here for his many talks and series of books that brought the idea of the Singularity out of nerdy obscurity and into the mainstream.

Roughly 60 years after the idea of an intelligence explosion was first promulgated, it is now taken seriously by serious people that it is upon us.

And so, with one foundational vision of the technological future dealt with, it’s time to examine another.

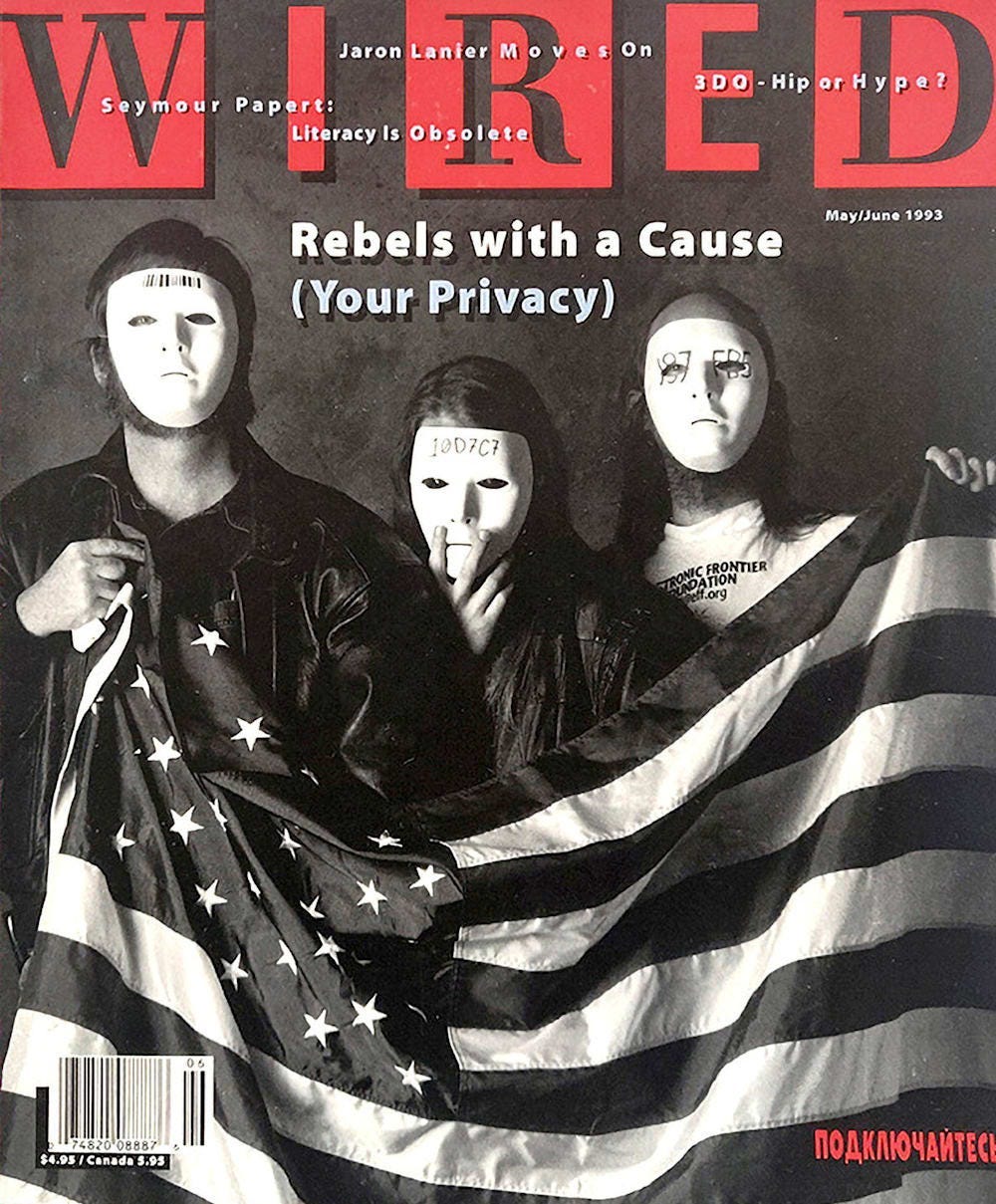

The Cypherpunks

Around the same time that Vinge and Kurzweil were talking about the Singularity, a separate group of tech visionaries was emerging. This group was less oriented on the ‘far’ future, and had counter-cultural roots in hacking, anti-establishment libertarianism, pirate-radio, and political activism. They were gritty freedom-fighters waging an unseen war against the creeping technologically-enabled authoritarianism. They saw the foundations of the panopticon being built around them, and they worked to subvert the coming dystopia. They were punk.

It started much earlier than this, though. The earliest of the Singularitarians and their comrades laid the foundations for what would later become computer science. But developing the earliest electronic computers was not a terminal goal of their efforts, it was only ever instrumental. What mattered, for them, was winning The War. And their contributions to that effort were in the field of cryptography.

Allied governments went to great lengths in developing the field of cryptography (both to protect their own communications and to intercept and understand their enemies’), which paid off tremendously. As an added bonus, many of the nerds who led this charge continued to be militarily useful after the fall of Berlin, serving in various nuclear, space, and surveillance programs as the Cold War began heating up. Whether a home-grown talent or recently patriated kraut22, the transition from wartime academic to career member of the burgeoning US security state was often seamless.

And so, in 1954, the US government decreed that encryption (which was, at the time, almost exclusively being used and advanced by military and intelligence agencies) was not only dangerous, it was a controlled munition or military weapon, subject to the United States Munitions List.23 That’s right, even a book was deemed a legal munition, and mailing it overseas was legally equivalent to smuggling nuclear weapons to a foreign country. Even merely signing your email with PGP (if sent outside of the United States) was considered international arms trafficking.

The cypherpunks saw this as not only unconstitutional but a grave threat to privacy and the ordinary citizen’s ability to live freely on the web. It was evidence of a burgeoning totalitarian state’s overreach, and the pivotal battleground upon which hinged the entire future of digital freedom.

They recognized that the communications and computing tools that were being developed offered the promise of unprecedented boons to humanity. The information superhighway could one day allow anyone to directly interact with anyone else at the speed of light for low or even zero marginal cost, regardless of who or where they were. It could place the whole storehouse of human knowledge at the fingertips of everyone. It could facilitate the free exchange of ideas and information in an utterly unprecedented way. It could unlock entirely new levels of freedom and human flourishing. But, like any powerful tool, it could also be used by the dark side. It could allow pervasive tracking and persistent, global surveillance on a scale that not even Orwell could have imagined. The cypherpunks realized this and were determined to fight for a good future. Begun, the crypto wars had.

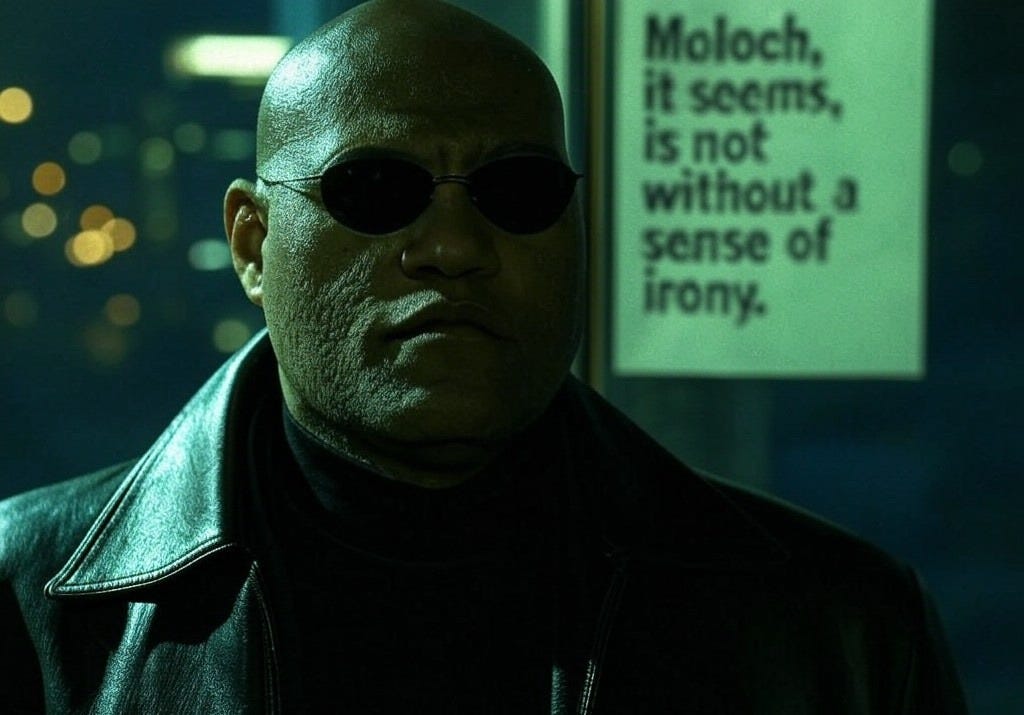

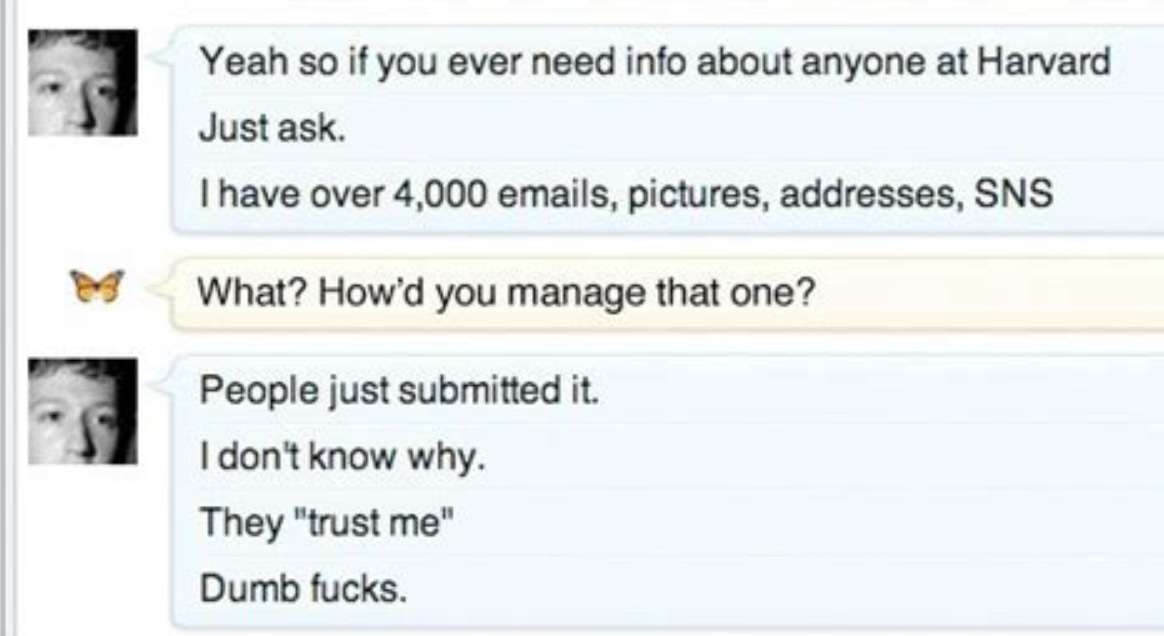

Even with occasional victories here and there, in the end, the cypherpunks lost. The leviathan state managed to fend off their scattered attacks for the most part, and absorb and redirect what losses were inflicted. Despite the proliferation and decriminalization of encryption, regardless of WikiLeaks and whistleblowers, the centralizing forces of Moloch managed to create, or to infiltrate and subvert, the companies and the (now entrenched) institutions that run online life.

To be clear, we are living in an even more pervasive and insidiously invisible surveillance dystopia than that which the cypherpunks feared. The panopticon has been created, and it is far more subtle and attractive than had been imagined. Not only is it a prison for our minds, but it is one to which we24 have actively submitted ourselves.

Although the cypherpunks largely failed in the end, many of them are still with us, still spreading the message of freedom, and hard at work building tools that could still help free us from this Molochian nightmare. The OG cypherpunks, those adjacent to them, and their descendants have given us basically everything that is awesome and freedom-maximizing in the technological world. FOSS, wikis, encryption, Bitcoin (and, for better or worse, all of “crypto”), Prospera, network states, Seasteading, BitTorrent, the Silk Road25, some of Neal Stephenson’s best works (Cryptonomicon, Snow Crash, The Diamond Age), the Electronic Frontier Foundation, the Free Software Foundation, GNU/Linux26, Tor, and more.

I’d condense and summarize the core philosophy of the cypherpunks like this: the more we can decentralize and distribute power, the better off we’ll all be.

κυβερνήτης (The Helmsman)

Any discussion of 21st century accelerationism must always revolve around the blinding, dizzying, and disorienting intellect of Nick Land. He is the fulcrum of accelerationist thought, against which all accelerationist thought reacts.

But first, a brief digression into etymology. What does cybernetic mean? We have some vague idea of the prefix “cyber-” meaning tech-stuff, internet-related, or something.27 But let’s plumb these depths a little deeper still. Cybernetics is a loanword from the ancient Greek: κυβερνητικός (kubernetikos28). Which means something like ‘good at steering’ or ‘skilled pilot’. I would suggest ‘self-regulating’ as a succinct definition. Let’s stick a pin in this etymological and linguistic tangent before we pick it up again, and move on to what cybernetics actually is.

Cybernetics was defined by Wiener as “the science of control and communication, in the animal and the machine”—in a word, as the art of steersmanship, and it is to this aspect that the book will be addressed. Co-ordination, regulation and control will be its themes, for these are of the greatest biological and practical interest.

Cybernetics offers the hope of providing effective methods for the study, and control, of systems that are intrinsically extremely complex. It will do this by first marking out what is achievable … and then providing generalised strategies, of demonstrable value, that can be used uniformly in a variety of special cases. In this way it offers the hope of providing the essential methods by which to attack the ills—psychological, social, economic—which at present are defeating us by their intrinsic complexity.

-W. Ross Ashby, An Introduction to Cybernetics

Here, I’ll nod towards the critical tradition and briefly acknowledge the milieu from which Landian cybernetic thought arose, and now move past it.29

Of the many faces of Land, his time with the Cybernetic Culture Research Unit (CCRU30) is the most relevant to this mythopoeia.

There are many papers and articles and books yet to be written on the various activities and thoughts of the CCRU. But this article is not the place for a meaningful recounting or analysis or synthesis of them. Instead, I’m going to pluck only three things from the CCRU/Landian primordial goop:

Egregores

The CCRU both created and battled egregores31. This was all done (presumably) for human posterity - posthumanity32. Though perhaps not actually true, as far as we are concerned, they created (in the modern, cybernetic, internet age) the very concept of an egregore. Not that egregores didn’t exist prior to the CCRU, for they have existed at least as long as language-enabled humanity has, but the CCRU identified many of them. And though I’m unaware of the CCRU using the term itself, they laid the conceptual groundwork that later communities would build upon.

Hyperstition

This is perhaps the most memetically successful creation of the CCRU, as the term, if not a full understanding of the concept, has largely entered the mainstream (of the terminally online, at least). Hyperstition, to put it simply, is sort of like a self-fulfilling prophecy. It’s a meme that makes itself real. Or, to connect back to Vernadsky and de Chardin, it is something that originated in the noosphere, reaching down/back into the bio-(and/or geo-)sphere to instantiate itself. For:

A hyperstition is the λόγος33 inflicting itself upon the material world.

This is similar to, though not the same as, placebbalah (that is, kabbalah as placebo ritual magic, as described in Scott Alexander’s Unsong34) and nominative determinism.

Esoteric fun aside, the practical thrust of hyperstition is thus: ideas can make themselves real. Fiction can influence and shape reality. Visions of the future that are popular enough can self-realize. From the Star Trek tricorder to the iPhone/smartphone, from Gibson’s Neuromancer/Stephenson’s Snow Crash/Cline’s Ready Player One to our looming internet 3.0 (or, 🤮, metaverse35). Hyperstition has no particular aim or morality. ‘Good’ things can be hyperstitioned. ‘Bad’ things can be, as well. When we treat with the outside, we should be careful to manage our expectations of the results - for the outside is, by definition, inhuman.

The main point of this, though, is that things can (in fact) be hyperstitioned. Regardless of your ontology, the simple fact is that ideas can become reality. Even stronger, ideas often shape (even if only in the most banal sense) the future. And while the CCRU came up with these concepts internally, they were also shaping how future people would come to understand them. That is to say that they not only created the concept, but they steered all of the future in a certain direction by creating the concept. As a very minor example, this article would not exist without the CCRU having brought these ideas into existence, and, at a minimum, would be a different article had they developed the ideas differently.

This is where hyperstition intersects with meme magick, for hyperstitions can be intentionally created with the aim of changing the future, and possibly the past as well.

Accelerationism

There are many sources more eloquent and pithy than me that go into the meaning and history of the various ‘accelerationist’ movements. But here’s my take on the main thrust of all the many and variegated elements of accelerationist thought: things are moving in some particular direction - we would do well to move them along faster36.

Now, to be clear, this is not how the CCRU (nor Nick Land himself) saw accelerationism. For them/him, accelerationism was kind of about communism (in the Marxian sense of transcending Capitalism via the inevitable process of historical materialism). But more than that, it was about rejecting humanity and embracing the inhumanity of the technocapital machine37.

Shortly after the works of the CCRU entered the wider memeplex of the internet, the idea of accelerationism underwent a Cambrian-like ideological explosion, differentiating into a wide variety of forms. This is the advent of */acc, for there was accelerationism, and then right-accelerationism (r/acc), left-accelerationism (l/acc), unconditional accelerationism (u/acc), zero-accelerationism (z/acc), and many others.

In many ways, e/acc is a deterritorialized take on */acc, accepting the etymological premise of accelerating processes that are underway, but mapping that concept onto an entirely new set of premises (of course, there was even further splintering in the immediate wake of e/acc: d/acc, bio/acc, λ/acc, ∞/acc, lunarpunk, cosmicpunk and more - some more serious than others). It has naively taken the language of accelerationism, uncaring of its heritage, and vibe-shifted it into new territory. What, exactly, e/acc maps onto (mythologically speaking) will become clearer as we progress further through this cast of characters.

The Doomsayer נביא של גורל האבדון

Back in the twilight of the world wide web’s golden age (before “mobile” finally killed off the last remnants of quality on the internet, that little which had somehow managed to survive the blitz to monetize the Eternal September’s freshmen through advertising), I stumbled across the super-erudite and intimidating forum Less Wrong (LW)38. As was the case for many others, this was my introduction to the Bay Area/online rationalist39 movement.

Love him or hate him, there is no AI or rationalist discourse that hasn’t been influenced by the OG AI Doomer, Eliezer Yudkowsky. He’s been sounding the alarm since long before most people even recognized that there was anything to have an alarm for. As much as I’ve ended up in a very different place than Yud regarding AI x-risk40, I have to praise him. Like most others who even know what I’m talking about, his work has deeply shaped me and provided the framework for my practice of rationality. Although Eliezer will likely decry this paper, should it even ever cross his vision, I’d like to properly acknowledge him. Any of the new, young e/acc’s who are in it for the vibes probably have no idea of the debt they owe him. There is no e/acc without Eliezer (and I can just hear him scream in Oedipal anguish upon reading this line).

Just as there would never have been a Hegel without Kant (who would have been opposed to him) nor a Marx without Hegel (who would have opposed him), there never would have been a Beff without Yud. For Eliezer created the rationalist community that became Less Wrong. And that community (and its eventual extensions) made, influenced, or extensively interacted with virtually every major AI personality today. With a bit more genealogy that we’ll get to in the next sections, Yudkowsky’s influence led to the AI Safety movement.

The Codifier

After LW came Slate Star Codex, a blog written by Scott Alexander which hosted perhaps the best comment section the internet has ever seen. Throughout the twenty-teens, Scott and his blog wielded immense influence over the rationalist and Bay Area tech communities, driving discourse and shaping the minds that would go on to shape AI, tech ventures, eventually US politics, and thus, the world (as we are now seeing in early 2025).

There’s so much to be said about Scott and SSC/ACX41, and many of his articles remain among the most thoughtful and thought-provoking things I’ve ever read. But, for this mythopoeia, there are two things that stand out.

The first of these two is arguably his best and most enduringly influential article. In it, Scott identifies and examines the nature of perhaps the most pernicious egregore to have afflicted humanity from time immemorial:

MOLOCH

Meditations on Moloch is a massive essay. Scott methodically examines Moloch, using the stanzas of Allen Ginsberg’s famous poem to test various hypotheses and better understand its nature. You won’t really grok the full extent of how insidiously pervasive Moloch is without actually taking the time to understand it (such as by reading Scott’s essay), but here are a couple of excerpts to try and get to the core of things:

There’s a passage in the Principia Discordia where Malaclypse complains to the Goddess about the evils of human society. “Everyone is hurting each other, the planet is rampant with injustices, whole societies plunder groups of their own people, mothers imprison sons, children perish while brothers war.”

The Goddess answers: “What is the matter with that, if it’s what you want to do?”

Malaclypse: “But nobody wants it! Everybody hates it!”

Goddess: “Oh. Well, then stop.”

The implicit question is – if everyone hates the current system, who perpetuates it? And Ginsberg answers: “Moloch”.

The question everyone has after reading Ginsberg is: what is Moloch?

My answer is: Moloch is exactly what the history books say he is. He is the god of child sacrifice, the fiery furnace into which you can toss your babies in exchange for victory in war.

He always and everywhere offers the same deal: throw what you love most into the flames, and I can grant you power.

Moloch doesn’t literally exist, but serves as a useful category or egregore. Once you’ve built the category of Moloch in your mind, you’ll start to see his impact and effects all over the place. You’ll see Moloch’s hand in game theoretic defectors, the tragedy of the commons, races to the bottom, enshittification, bureaucratic cruft, administrative bloat, soaring national debt, government and corporate surveillance, and on and on. Moloch is the enemy. If we end up destroying ourselves by means of AI or other technology, it will have been Moloch pushing us to do so.

Scott’s second main relevancy is his positioning and role in the development of the broader rationalist community.

As mentioned in the last section, Yud founded the rationalist community and gave it its first home in LW.42 Several years later, Scott launched SSC and there was now a second home. One with a different format and vibe. And though probably unintentional, this was the start of the first major schism in the rationalist community. Not everyone from LW visited SSC. And not all of the new people who found SSC made their way over to LW.

Who are the “postrationalists”? Well, that’s a complicated question, but the short answer is that they are those who went through the rationalist/LW journey but found themselves wanting something more. Some went the way of woo, others delved into various schools of philosophy, and others still applied the methods of rationality to the messy reality of human relationships. This illegible mess of a nonetheless semi-coherent group coalesced into what became known as tpot43.

The Paradoxical Presequentialists

As a group, rationalists try to think from first principles, entertain outlandish or distasteful ideas for at least the sake of argument, and often willingly bite the bullet and accept unconventional conclusions when they are convinced of an argument’s soundness44.

The growing community of rationalists contained a disproportionate share of high-intelligence, high-agency people. It also tended to attract highly educated people who were going through their academic careers. It wasn’t narcissism for many of these to realize that the decisions they made regarding what to study and then what professional fields and roles to pursue would have outsized impacts on the world.

Given all this, it should be utterly unsurprising that even quite early on, many rationalists turned their attention to philosophy (though often with disdain) and ethics in an attempt to discern how they ought to spend their limited resources (time, attention, professional effort, money, etc.).

It seems that the sort of people who gravitate towards rationalism are also attracted to consequentialism45 as an ethical framework. Rationalists were already learning how to calculate their way through life using Bayes’ Theorem, making their beliefs pay rent and so on; utilitarianism would not only allow but demand a similar approach to ethical questions.

And so, the rats discovered Peter Singer, just the right kind of bite-the-bullet, hard-nosed utilitarian philosopher with a shining pedigree. Influenced by Singer, questions of animal welfare, euthanasia vs. life extension, and extremely diligent util tradeoff calculations became ever more prevalent among LW ethical discussions. Emblematic of the kind of earnest yet dogged approach to calculating the ethically correct action is Singer’s famous thought experiment of the drowning child. It is intended to highlight a flaw in the cognitive heuristic that our ethical intuitions rely on - exactly the kind of insight that rationalism was founded on.

Offline, there was a second center of rationalist gravity besides the Bay Area: the University of Oxford. In the late aughts and through the teens, this is where the serious rat philosophy was being done, in two different (though connected) realms: rationalist ethics and existential risk. Some amazing people, all there at the same time, creating various organizations, and tackling the big problems from various directions.

Rational Ethics

Along with Peter Singer’s influence, William MacAskill and Toby Ord of Oxford were probably the most active drivers within academic philosophy of what came to be known as Effective Altruism (EA). If you’re a rationalist and you apply the methods of rationality to ethics, some flavor of strictly held utilitarianism is likely to be the result.

EAs like to use QALYs46 in calculating maximum utility. This metric allowed them to literally calculate the best or most efficient allocation of scarce resources. In the first decade or so of EA, this is where the community really shone. Organizations like GiveWell and Open Philanthropy did assessments and reports of various charitable organizations and possible philanthropic interventions, scoring them, allowing those who wanted their altruistic actions to be effective to select the best bang-for-the-buck charitable giving. Organizations like 80,000 Hours focused on helping (mostly young) people find those careers and employers that would enable them to ensure their professional lives were being similarly effective in creating a better world.

Existential Risk

Along with Eliezer, Nick Bostrom is probably the person who has thought most deeply and rigorously about superintelligence and its likely implications. I highly recommend reading his book Superintelligence: Paths, Dangers, Strategies if you want to understand more about AI hype - whether it will create a post-singularity scifi utopia or kill us all.

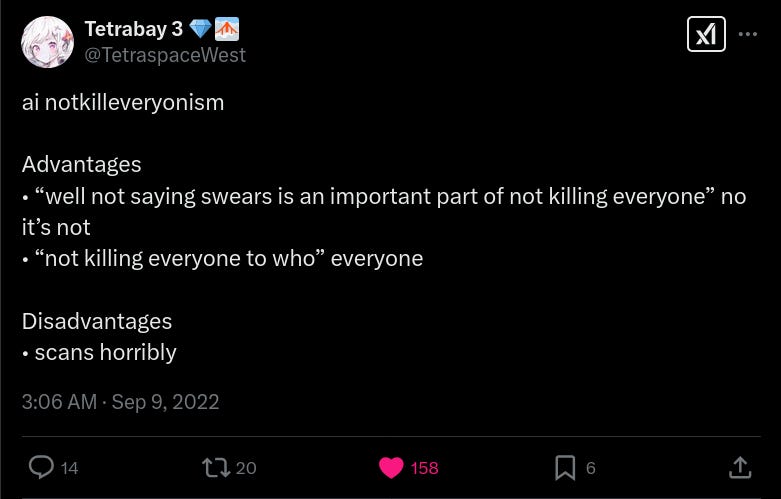

But that’s the one-sentence implication of his work, and the explanation for Yudkowsky’s dread. If there’s even a 1/100 chance that AI will kill us all rather than usher in scifi heaven, we should be extremely worried and cautious. After all, there’s no coming back from extinction to learn our lesson and try again. I suspect that from the beginning, Yud’s hope for the rat community was that it would battle against the (then) future existential threat of AI. A whole ecosystem of EA/rat organizations focused on AI safety/control/alignment/notkilleveryoneism47 sprouted up and heavily influences AI companies and governments around the world to this day.

Now what do you suppose might happen when you put these two areas of EA together? If AI has even a small chance of wiping out humanity (and most EAs believe it has a moderate to extremely high chance of doing that or worse), then any action taken to avoid this outcome becomes not only ethically acceptable, but obligatory. And any action not taken towards preventing this outcome is morally equivalent to bringing it about. Predictably, the EA movement became more and more focused on AI x-risk to the growing exclusion of other, earlier areas of major EA concern (animal welfare in factory farming, mosquito nets, vaccinations, etc.). After all, every dollar and minute not spent fighting against AI Doom might as well be a dollar or minute spent promoting it. It’s like a morally inverse Roko’s Basilisk48.

And then, in November of 2022, SBF blew up FTX, seriously damaging EA’s wider reputation as self-sacrificing ethical paragons who stood in a position of moral authority.49 And so EA largely retreated from the spotlight and persisted in their AI safety roles and organizations.

Oh, and about those roles and organizations. Per Bostrom, a multipolar superintelligence world is almost necessarily better (for us) than a unipolar superintelligence world. So rats, EAs, and their adjacent fellow billionaire travelers got together to form a new AI lab that would be nonprofit and open source, working towards the benefit of all humanity - not just one of the world’s largest corporations. “Open”AI. And EAs founded and staffed a bunch of AI Safety-focused research and advocacy organizations. And they (being shepherded towards AI Safety careers by way of Computer Science/ML PhDs from EA organizations like 80k Hours) began to permeate every major AI outfit. Heck, when “Open”AI wasn’t EA/AI-Safety oriented enough, a bunch of them left and founded Anthropic to make safe AI. And then Ilya left to start Safe Superintelligence, Inc. And a bunch of other people left for a bunch of other new “safe” AI startups in between and since.

The upshot is that basically the entire SOTA AI industry is staffed and run by EAs trying to prevent an unsafe closed/unipolar fast AI takeoff. And yet somehow, they are the ones that are daily inching us closer and closer to exactly that worst of all possible worlds.

After all, it was EA’s penetration into tech and AI as well as their growing p(doom|AI) extremism that prompted a reaction.

The Poasters

Tired of the direction things were going in the tech sphere, of the defeatism, the nihilism, and the calls for new authoritarian government interventions, a few grizzled veterans of the meme wars, higher academia, and startup culture decided to stand against the prevailing winds. They clawed their way out of the tech mines up into fresh air, where they claimed a spirit of invigoration, determination, and optimism to share with the world. Rather than lying down to gently go into that good night, they chose to follow in the footsteps of Prometheus and Rage! Rage against the dying of the light.

They found each other in/around tpot, and something special was born. Users creatine_cycle, zestular, bayeslord, and Beff Jezos came together and hashed out the starting core concept of Effective Accelerationism.

This core group put together a few explainer articles early on, they can all be found on the

. In addition to these, there have been many groupchats and spaces on Twitter where the e/acc community ‘lives’. But mostly, it’s just people who have been inspired by the message of techno-optimism deciding to lock in and build, wherever they may be in life, doing whatever they do best.

Conclusion

There are many other people, ideas, and movements that played historical roles in the eventual emergence of e/acc. But these nine mythological figures are the golden threads of the past that, when woven together in the present, show a path leading into the distant future. A Golden Path. One in which Moloch is defeated (or at least diminished), humanity and our descendants flourish, and intelligence and the light of consciousness are spread throughout the universe, securing as much of our present light cone for our posterity as possible. One which threads all the needles, avoiding the many possible dystopian failure modes, from extinction to slavery to totalitarian surveillance states.

This is the future that e/acc seeks to protect and create, the birthright we wish to bestow on our descendants. Into the singularity and beyond, opening the cosmos for any and all to freely explore, evolve, excel, and enlighten. The stars are our destiny, we need only reach out and take them.

The stars await us…

From the Greek, τέλος. Without getting into a whole treatise on the four Aristotelian Causes, a telos is some thing’s ultimate purpose or endpoint. It is the goal towards which something reaches. Something having a telos could imply intent, it could suggest design, it could impute retrocausality. It need not do any of these things.

Consider Conway’s Game of Life. A system with a simple ruleset and a starting state will deterministically lead to some outcome. Whether that outcome is static, or repeating, or endlessly non-repeating (like the digits of an irrational number), it is deterministically set by the initial conditions (rules and state). Computational irreducibility (as well as Gödel and Church-Turing) tells us that we can’t always know the end result of (even) a deterministic process in a complex system before it plays out.
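For the code-inclined, here is a minimal sketch of that idea in Python. It assumes an unbounded grid stored as a set of live (x, y) cells; the names `step`, `run`, `block`, and `blinker` are my own labels for illustration, not anything canonical. The point is only that the rules plus the starting state fix everything that follows, even though the general way to learn where a pattern ends up is to run it.

```python
from collections import Counter


def step(live):
    """Apply one generation of Conway's rules to a set of live (x, y) cells."""
    # Count how many live neighbours each cell on the grid has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def run(live, generations):
    """Advance the pattern a fixed number of generations."""
    for _ in range(generations):
        live = step(live)
    return live


# Two well-known starting states (coordinates chosen arbitrarily).
block = {(0, 0), (0, 1), (1, 0), (1, 1)}   # static: never changes
blinker = {(0, 1), (1, 1), (2, 1)}         # repeating: period 2

print(step(block) == block)        # the block is a fixed point
print(run(blinker, 2) == blinker)  # the blinker returns after two steps
```

The same `step` function, fed a different starting set, can just as easily produce patterns whose long-run behavior you can only discover by letting them play out.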

Thus, while “telos” often connotes the supernatural, and I am leaning on that reading for the vibes, it technically need not, which I am leaning on for correctness.

In the unlikely event that you are reading this and are unfamiliar with the online/Silicon Valley rationalist movement, let me point you towards an ancient post that attempted to lay it out for those who were definitely not a part of it. Even those who are familiar may find the thread interesting (as I did, just now, rereading it) as it is a baby rat attempting to explain rationalism to a very different audience, and their reaction to it. “Rat” is shorthand for a rationalist.

For my fellow pedants: Yes, I agree that the words “rationalist” and “rationalism” are massively overburdened, but I didn’t choose the terms that stuck, and here we are. Anyways, chances are that anyone reading this is a rationalist, post-rationalist, or rationalist-adjacent, and you probably most associate these terms with the relevant movement.

As for the question, “what is a postrat?”, well, I elaborate a bit in The Codifier section. But, in short, it is someone who went through the gauntlet of the rationalist scene and emerged out from some other side of it. There are many different kinds of postrats, but they share a rat pedigree.

Yes, much of this sentence is shamelessly cribbed from the very poem in the following block quote, Tolkien’s Mythopoeia.

For we humans understand the world through narrative.

There are plenty of articles that I could link here about sense-making, meaningness, and narrative to support this statement. Ultimately though, it is an assertion, and one that seems to be unverifiable empirically (for the time being, at least). So, rather than flooding you with yet more links to papers in these extensive footnotes, I’ll simply ask you, dear Reader, to accept this statement as an axiom and come along for the ride.

This term, and the following screenshot quote both come from Neal Stephenson’s amazing work of speculative fiction Anathem. It is my all-time favorite book.

Like virtually all of modern philosophical thought, we could trace a direct line back to Kant (and, of course, from there back all the way to the thinkers of the Axial Age). Given the ‘accelerationism’ label, we must, of course, contend with Land, which implies a lineage descending from Kant and then through the branchings of the dialectic, Post-Marxism, semiotics, Critical Theory, and postmodernism/poststructuralism. All this with a special emphasis on thinkers such as Deleuze & Guattari, Baudrillard, Freud, Nietzsche, Lacan, Lyotard, and many others.

However, it is important to realize that despite being indebted to this tradition genealogically, the e/acc milieu actively rejects much of it. Given that, I will not be extensively referring to or building upon this strand of lineage nor relying upon the critical theory terms of art such as “deterritorialization”, “machinic desire”, “hyperreality”, “rhizome”, and so on. Of course, in the section on Land, I will necessarily use some of this terminology.

Of course there is intense debate and gatekeeping about the origin of science fiction. I do not intend to wade into these tumultuous waters. Instead, I point out that the emergence of what most of us today would recognize as some form of scifi (Shelley’s Frankenstein 1818, Verne’s Journey to the Center of the Earth 1864, or Wells’ The Time Machine 1895) throughout the nineteenth century demonstrates a sweeping vibe-shift that the Cosmists were very much a part of.

This will become a recurring theme with many of our characters, the blend of rigorous thought with some combination of fiction, the occult, and/or the esoteric. There is a strain of the cracked schizo-autist that runs deep in this cast. Those that were too early would have fit in nicely with tpot if only they lived today.

Specifically referencing Dawkins’ memetics here.

Beff will get his introduction in due time.

Theia was a Titan from Greek mythology, the mother of Selene (the moon goddess), and sister-wife to Hyperion. Because of her relationship to Selene, the planet that is hypothesized to have collided with Earth some 4.5 billion years ago, leaving remnants/ejecta which then formed the moon, is colloquially named Theia. Incidentally, Hyperion is the title of a fantastic scifi novel which becomes relevant in the next section.

This recursive and fractal type of cybernetic system is a wonderful way of interpreting and understanding humanity’s impact on our planet. Climatic change is, of course, the steady state of climate. Totally unrelated to the rest of this article, I’d argue that this is the proper frame in which to view climate change. That is, climate change as a worry is entirely anthropocentric. Or, climate change should only be seen as ‘bad’ by humans in as much as it negatively impacts us. For, is there some “natural” or “pure” moment in planetary history that should be preserved as in amber throughout deep time? Obviously not. Why should this anthropocenic moment of geological history be privileged as the one, true moment worthy of ecological conservation? Imagine nautiloids trying to pause climatic conditions in the Late Cambrian - we’d never have arrived, let alone be able to bootstrap that which comes next.

n.b. This is a naïve usage of the word “entropy” that calls to mind the idea of the heat death of the universe and the resulting seeming paradox of evolutionary processes working against the second law of thermodynamics. On the account of entropy given by Jeremy England and the founders of e/acc, life (as a result of evolutionary processes) does not run counter to the tendency of entropy to increase over time. Rather, they find that more evolved systems are more effective at capturing free energy which is then dissipated as heat, i.e., they increase entropy with high efficiency. Evolutionary forces (whether solar, biological, or memetic) are basically the universe performing stochastic gradient descent to find (produce) entropy maximization.

All of this is to say that despite Vernadsky posing the ‘element’ of life as counter to entropy, and thus seemingly in contradiction to England et al., his understanding of the natural forces leading inexorably to evolutionary processes creating life is very much in line with theirs. The difference is merely terminological.

I’ve been going on about this book and the series for years. But it wasn’t till writing this article that I found an amazing master’s thesis all about Hyperion and its sequel The Fall of Hyperion. This thesis hits all of the points that I love to make, and many more that I never did, being uneducated about poetry and music and other things besides. I haven’t been able to track this guy down on Xitter yet, but he is def one of us. Here’s a little nod to you, Prof Steiner: may your work act as lights that will keep guiding us on our path to Omega.

Give this thesis a read (especially if you’ve read Hyperion), it might restore your faith in (some of) the humanities:

Philip Steiner’s “Ennobling SF: Intertextuality, Metafiction and Philosophical Discourse in Dan Simmons’ Hyperion and The Fall of Hyperion”

Yes, there is a strong Promethean vein running through e/acc. Literary critics would likely be quick to bring up other Greek mythology, such as the legends of Pandora or Icarus. But let us not gloss either of these legends as a quick gotcha rejoinder. For at the bottom of Pandora’s Jar was hope. And though he flew too high, Icarus (and Daedalus) were escaping King Minos’ imprisonment. Though the way be treacherous, is it not better to try and fail than to never try at all? Especially when the failing is not necessarily existentially catastrophic, but never trying at all is (more on this later). Instead of being forever petrified by the fear of failure, we should learn to fail better.

Though pretty unconventional in his theology, with the avocation of paleontologist and evolutionary theorist, Teilhard was a devout Catholic and Jesuit priest.

Unfortunately, it’s a poor borrowing, in my opinion. Though less snappy, “event horizon” should have been the borrowed term. Regardless, the concept of a technological singularity is fundamental to e/acc, and we need not get too caught up in terminological quibbles.

“Modern” in the sense that the word no longer labels an occupational role that humans fill, but describes instead the mathematical activity that a machine undertakes.

After being introduced to the East Asian game of Go by Turing, Good later popularized it to the Western World. See this 1965 article from New Scientist in which he lays out the basic rules, some typical strategies, and why the game is so much more interesting than chess.

For much more on Jack Good and his work during and since the war, check out Sandy Zabell’s fantastic paper The Secret Life of I. J. Good. Be prepared to follow along with Bayesian statistics formulae as applied to cryptology.

Everybody’s heard of Operation Paperclip, in which the US patriated ~1600 Germans at the end of the war, primarily for use in rocketry. Less known is that there were a number of similar programs but with various foci in terms of field or technological specialty. For example, TICOM and Operation Stella Polaris were two operations specifically focused on capturing cryptologic/signals intelligence personnel and materiel.

The USML was codified in USC Title 22 in November of 1954. The USML defined categories of “munitions” that were to be export controlled. Category XI—Miscellaneous Articles contains a number of items from RADAR to body armor to tear gas. Item (j) reads, “Cryptographic devices (encoding and decoding).” But it’s the next bit that’s the real kicker:

“CATEGORY XIII—TECHNICAL DATA

Technical data relating to the articles here designated as arms, ammunition, and implements of war except unclassified technical data generally available in published form.”

Software came to count as such. This is how cryptographic software eventually got caught up in ITAR.

“We” the collective, “we” the society that currently exists. Perhaps, even, “we” as the dominant mass psychology of humanity. Of course, many of us have resisted over the years, but this resistance is not even futile given the pliability of the masses. One need only look to what is currently happening with “rednote” (oh, universe! spare me from the unironic irony of human stupidity! 小红书, Little red book. How the masses thirst for totalitarianism) to observe the obsequious drive towards docile slavishness.

So glad to see that Ross has finally been freed!

Cyberspace. A consensual hallucination experienced daily by billions of legitimate operators, in every nation, by children being taught mathematical concepts... A graphic representation of data abstracted from the banks of every computer in the human system. Unthinkable complexity. Lines of light ranged in the nonspace of the mind, clusters and constellations of data. Like city lights, receding.

-William Gibson, Neuromancer

The more tech-inclined amongst you might find this terminology familiar - Kubernetes (κυβερνήτης) literally means ‘helmsman’ or, in more modern parlance, pilot (think maritime, not aviation - that’d be closer to navigator, though the roles don’t really map one-to-one). You may recognize this from the software deployment automation system, Kubernetes.

Allow me to slightly belabor the point of footnote 4:

One of the major criticisms laid at the feet of recent */acc movements (and e/acc in particular) is an ignorance of the critical/philosophical milieu from which they spring. This style of criticism is both insightful and yet also entirely misses the point. Whether or not Marc Andreessen has read Anti-Oedipus or Nomadology or is familiar with D&G’s conception of the Techno-Capital Machine in terms of the desiring-machine or the War Machine is utterly irrelevant.

What has been attempted by the e/acc movement so far is not in any way part of the Continental or post-Marxian traditions. Yes, this is arguably an example of hyperreality or the map consuming the territory. And yet it is also, in the sense of Girardian mimetics, a deterritorialization of terms and concepts. Most ‘educated’ e/accs know of Land. I imagine that few would know of D&G or Baudrillard or Girard. So what? Land, and the CCRU, hyperstitioned all sorts of egregores into the collective memetic unconscious. Though we pedants might decry and resist it, neither language nor culture is particularly amenable to prescription. In the same way that we may carry a genetic lineage that implicates all of Eukaryota (but we humans give no fucks), the e/acc memeplex implicates much or all of Continental Philosophy (but gives no fucks).

There is so much of interest to say about the CCRU, but most of their work is even waaaay more schizo than I’m trying to be. If you haven’t delved this particular chasm yet, and have a decent level of resistance to the abyss, then I urge you to dive in. The CCRU, in brief, was an artistic-philosophical group of orthogonal-cultural pioneers. They blended aesthetic, mystic, esoteric, philosophic, ecstatic, and academic praxes through the lens of cybernetics. It’s wild stuff.

Should you feel up to the challenge (this is full-on memetic hazard territory) and have your man-pants at hand, you can find the writings of the CCRU on a variety of archived blogs, and many of their works conveniently collected here. Or you could watch an audio/visual production of a reading of one of Land’s works like this.*

*If only I could footnote my footnotes… the semiotically inclined amongst you will notice the semantic flailing at “here” and “this”, as if the words themselves actually pointed to anything absent the hyperlinks… Forgive me, Saussure

So, if you haven’t already picked it up, things get weird with the CCRU. Egregores are what Land would now call hyperagents, or what the LW community describes thusly:

Adapted by the rationalists from an older esoteric term for a spirit made of a group's thoughts, an egregore is a collective (of persons) that seems to act like a being in its own right, like "America" or "Microsoft", although one need not be legally recognized to qualify. They often don't act in the best interest of individual persons, including their own members, analogous to predators or parasites.

It's unclear to what extent these entities can be called "minds" (or "conscious"), but your own mind is also made up of a collective (of neurons). "In truth, there are only atoms and the void," and yet we give names to higher-order structures, like individuals or countries.

Interestingly, both corporations and capitalist economies are not only egregores, but artificial intelligences in the Landian sense.

In the midst of the intractable culture wars and the resultant de/reterritorialization of words, it can be worthwhile to take etymological detours in order to remember what various terms meant all of, like, five minutes ago. Let’s take a moment to consider the word “transhuman”; a word that has become something of a slur recently.

In the deep, dark beforetimes of the 90’s internet there were various post-human movements. Organizations such as The Millennial Project and the Living Universe Foundation (which has apparently been resurrected - it was once a thriving online forum, then disappeared, but there is a website once again). Even further back, the futurists tended to be architects like Frank Lloyd Wright (see Taliesin - something that also features prominently in Simmons’ Hyperion Cantos) and Paolo Soleri. Paolo created the concept of the arcology and the most beautiful and impressive book I’ve ever owned [and I’m a damn book nerd], Arcology: The City in the Image of Man:

He also founded an experimental architectural community in the Arizonan desert (like Wright’s Taliesin West) named Arcosanti. If you’ve ever consumed scifi content that includes arcologies, hive cities, or the like (e.g., Judge Dredd, WH40K, the Dark Future novels, or anything similar), you have benefited from the fantastic conceptual derivatives of this school.

Forward-thinking people like those above, especially those in the early internet days (buttressed by forward-thinking science fiction like Snow Crash, Neuromancer, and many others) imagined a future in which humanity transcends its limitations. A posthuman* future.

*(Footnote to a footnote: Yes, I’m once again sliding past the heritage of critical theory, intentionally and with full self-awareness. This is not due to any ideological prejudice, but only practicality. Despite the theorists’ [left’s] claims of ownership over terms and concepts [e.g., anarchism], language doesn’t actually belong to any particular prescriptivist ideological school. And while I recognize the theorists’ claims to “posthuman”’s heritage, I am not operating within the strictures of that dogma, and am therefore free to use the word as it has found traction in the language, despite the tortured cries of linguistic ownership that might oppose me)

Back to the point about posthumanity:

This earlier, golden age of the internet, inspired by such architectural and authorial futurists envisioned a future in which humans had become more than human. A future in which there was a flowering of the essence of humanity into a rich and diverse multitude of posthumans. Posthumans who were adapted to a wide variety of planetary environments, from extremely high to extremely low gravity, those who could fly, those who were aquatic, perhaps even some (as with species of Ousters from Simmons’ Hyperion Cantos) who were adapted to living in space itself. “Post” in the sense that humanity had freed itself from the tyranny of blind, biological evolution. “Post” in the sense that these humans were free to redesign themselves for any environment, whether terrestrial, spatial, or even digital.

In this conception, posthumanity is humanity reaching its true potential and logical conclusion of not only mastering the world around them but themselves at a fundamental physical level. It is the teleological endpoint of humanity. More human than we current humans, held back involuntarily by our animal natures as we are. Given all this, we can begin to understand what “trans”human meant. If we are currently human, and we will one day be posthuman, then there must be some transitionary state. Transhuman was only ever meant to be a step on the road to posthumanity. But somewhere in the early ‘teens, the terminology leaked out into normie-space, and became a bogeyman that represented Soros/Gates-style demonic manipulation of humans into wire-heading cyborgs.

Also (and I would hope this is obvious, but then, current-moment issues consume everything these days), “trans”human has nothing to do with the 2020s “trans” (as in, transsexual or transgender) movement. Is there conceptual overlap? Of course! A posthuman future entails the ability for individuals to change their outward characteristics to match either traditional gender. Of course, it also entails a lot more! I, for one, wish (trans)gender discourse would take notes from Greg Egan’s Distress:

Gina nudged me gently and directed my attention to a group of people north, on the opposite side of the street. When they’d passed she said “Were they…?”

“What? Asex? I think so.”

“I’m never sure. There are naturals who look no different.”

“But that’s the whole point. You can never be sure - but why did we ever think we could discover anything that mattered about a stranger, at a glance?”

Asex was really nothing but an umbrella term for a broad group of philosophies, styles of dress, cosmetic-surgical changes, and deep-biological alterations. The only thing that one asex person necessarily had in common with another was the view that vis gender parameters (neural, endocrine, chromosomal and genital) were the business of no one but verself, usually (but not always) vis lovers, probably vis doctor, and sometimes a few close friends. What a person actually did in response to that attitude could range from as little as ticking the “A” box on a census form, to choosing an asex name, to breast or body-hair reduction, voice-timbre adjustment, facial resculpting, enpouchment (surgery to render the male genitals retractable), all the way to full physical and/or neural asexuality, hermaphroditism, or exoticism.

He goes on in less quotable blocks to describe a transhuman spectrum of gender that goes something like: ufem - enfem - ifem - asex - imale - enmale - umale

“No woman can speak on behalf of all women, as far as I’m concerned. But I don’t feel obligated to be sculpted ufem or ifem to make that point!”

“Well… exactly. I feel the same way. Whenever some Iron John cretin writes a manifesto ‘in the name of’ all men, I’d much rather tell him to his face that he’s full of shit than desert the en-male gender and leave him thinking he speaks for all those who remain. But … that is the commonest reason people cite for gender migration: they’re sick of gender-political figureheads and pretentious Mystical Renaissance gurus claiming to represent them. And sick of being libeled for real and imagined gender crimes. If all men are violent, selfish, dominating, hierarchical… what can you do except slit your wrists, or migrate from male to imale or asex? If all women are weak, passive, irrational victims—…”

Tangential digression to the tangential digression to the digression over.

All of this is to say, that the “trans” in transhuman is from the Latin, meaning across or beyond. It is the ‘trans’ition from human to posthuman. It is the transitory state, not the end goal.

Logos: Speech, reason, narrative, explanation. The word “word” in John 1:1 is translated from the Greek λόγος. With this understanding, the world/universe/cosmos spoke itself into being. It is from this verse (and in this sense - as I am referencing it above), that the title of Neal Stephenson’s amazing article/book, In the beginning was the Command Line is taken.

First, let’s note that Zuckerberg/Facebook/Meta didn’t coin the term “metaverse”. He/they straight-up stole it from Neal Stephenson’s Snow Crash. Second, let us never forget that Zuck built his fortune and empire on exploiting user data (and likely with US intel community funding and guidance.*)

*Though I can’t prove this, I have credible (to me) witnesses with first- and second-hand knowledge that the US Intelligence Community directly funded the ‘wide’ launch of “The Facebook” (which later became “Facebook”). There’s plenty of well-documented, plausible speculation out there. Even without taking my second/third-hand account of this, anyone should find this very plausible, if not extremely probable given the evolution of Facebook/Meta over the years. Never forget the origin: Zuckerberg trying to sell the PII of his Harvard classmates (the “dumb fucks”), to include their social security numbers. Vibe shift or not, based-4th-of-july-zuck or not, BJJ or not, open-sourced Llama or not, Zuck and his efforts have meaningfully eroded freedom in the United States (and the entire online world) for the average citizen. He has been building the panopticon to the direct detriment of every user.

Fuck Zuck.

Of course, there is a diverse ecosystem of accelerationist thought. The various */acc schools diverge on what direction things are moving towards and whether that is good or not, what getting to the ‘end point’ means, and how disruptive or gentle the transition from here to there will be. And then, on top of such ontological and epistemic considerations, one needs (in order to be deemed the “correct” kind of */acc) to layer on economic and political ideology. Most of these are retarded and gay (proof of life that I am a human and that this was not written by AI).

See Nick Land’s Meltdown. This stands in stark contrast to Marc Andreessen’s or Beff Jezos’s understanding of the technocapital machine (as referenced in footnote #16). Once again, (ironically) the language of the Marxian-lineage poststructuralists is being re-deterritorialized. Yes, Marc and Beff and others are likely unaware of the critical theory heritage of some of the terminology they use. But, as if Reality were a vindictive bitch with a sense of irony, she allows them to apply the very techniques of postmodern critique to do again to the same terms of language what the postmodern critical theorists did in the first place. Is this reterritorialization? Perhaps. Perhaps though, it is deterritorializing the signs that supposed semioticians de- and re-territorialized decades ago into an ossifying framework of masturbatory linguistic games.

Consistent footnote readers (and I have to imagine that’s anyone who’s read to this point) are well familiar with LW by now. In any case, the purpose of this footnote is to point out that the LW of the 20-teens is not the LW of today. And if you are not yet indoctrinated into LW, I’d recommend that you just ignore current stuff and start by working through the sequences (alternatively, you can find them collected here - they are also available digitally on Amazon under the title From AI to Zombies should you want to pay for them or get them on your Kindle) or, if you’re the particular kind of nerd (millennial) who likes Harry Potter, then you might try Harry Potter and the Methods of Rationality (audiobook version is available free [also anywhere you get podcasts] and excellently done [after the first few chapters] thanks to EneaszWrites).

See footnote #2 for some discussion of this community and the naming terminology.

Short for existential risk. X-risks potentially threaten our current civilization, humanity as a whole, or even all life on Earth. X-risks are those things which could potentially snuff out the light of consciousness.

Examples include all-out nuclear war, bioweapons, a massive object colliding with the earth (dinosaur-bbq-style), many other things with higher or lower likelihoods and, of particular relevancy, artificial intelligence (or artificial general intelligence [or artificial super intelligence - the terminology keeps evolving to keep ahead of the MBAs stealing it and diluting the intended meaning]).

Scott Alexander is a pseudonym. A New York Times writer decided to publish an article on Scott and SSC, but intended to de-anonymize/doxx Scott. This was a whole big ordeal. In any case, because of this, Scott closed down SSC. Here’s his statement on that. He later opened a new blog here on Substack, Astral Codex Ten. Here is his statement there, from after the NYT article was eventually published. There are plenty of articles, blog posts, and forum threads digging into all the drama of this episode if you want more.

In any case, this is why you see SSC/ACX referring to Scott’s blog(s). An interesting side note: “Slate Star Codex” is an anagram (well, almost - it’s missing an “n”) of “Scott Alexander”. The SSC site banner/header had an “N” to balance things out. “Astral Codex Ten” is actually an anagram of “Scott Alexander”. Fun stuff.

I realize that this is a significant simplification, and am aware of the history with Robin Hanson and Overcoming Bias.

If that (“tpot”) doesn’t mean anything to you, don’t worry about it - it isn’t meant to. Feel free to read my substack post about it. If you don’t already know what it is, you’ll likely only become more confused. But, of course, even things that were intended to be illegible gain legibility, often in a way that seems ‘wrong’ to those who previously gatekept the meaning. I say this because I’ve been seeing “tpot” mentioned all over twitter (“X”) lately, even in groups that seem very far afield of tpot. So, whatever.

To be clear, “soundness” means that an argument is both valid* and that it has no False premises.

*an argument is valid when (iff) it is not possible for the premise(s) to be True and the conclusion to be False.

Definitionally, if an argument is sound, then its conclusion is necessarily True. Despite this, many people will for various reasons refuse to embrace the conclusion of an argument that seems sound to them. To boldly and bravely accept the uncomfortable or distasteful conclusions of a sound argument (or at least one that appears to be so) is known as “biting the bullet”.
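For the formally inclined: in plain propositional logic, the definition of validity can be checked by brute force, by trying every combination of truth values and looking for a case where the premises are all True and the conclusion is False. Here is a minimal sketch of that idea. The function name `is_valid` and the two example arguments are hypothetical, chosen only for illustration.

```python
from itertools import product


def is_valid(premises, conclusion, n_vars):
    """Return True iff no truth assignment makes every premise True
    while making the conclusion False (illustrative helper)."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False  # counterexample found, so the argument is invalid
    return True


# Modus ponens: "if P then Q", "P", therefore "Q".
modus_ponens = is_valid(
    premises=[lambda p, q: (not p) or q, lambda p, q: p],
    conclusion=lambda p, q: q,
    n_vars=2,
)

# Affirming the consequent: "if P then Q", "Q", therefore "P".
affirming_consequent = is_valid(
    premises=[lambda p, q: (not p) or q, lambda p, q: q],
    conclusion=lambda p, q: p,
    n_vars=2,
)
```

Note that this only tests validity. Soundness additionally requires that the premises actually be True in the real world, which no truth table can tell you.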

The three major schools of thought for how we ought to understand ethics are deontology, consequentialism, and virtue ethics.

Deontological ethics refer to duty and obligations under some moral ruleset. For example, if God commands certain actions and forbids others, then you are following your ethical obligations so long as you follow those rules. Actions themselves are either morally good or bad depending on whether they adhere to or violate the set of rules. Many of the different deontological ethical systems vary on what that set of rules contains, and where it comes from.

Consequentialists judge the moral value of an action not on the action itself (as in deontology), but on the action’s consequence. If the action leads to a good outcome, it was a good action. As with deontology, there are many different varieties of consequentialism, the largest/most famous of which is utilitarianism. In utilitarianism, you measure the consequence of an action by its utility. What that utility is, and how you measure at societal/civilization scales (as opposed to the individual scale) lead to the many different types of utilitarianism.*

*It should come as no surprise that there is some terminological crossover between utilitarianism and machine learning/artificial intelligence research. The concept of an objective function heavily influences the Bay Area, computer-science-skewing rationalists on both. Remember that “everything 👏 is 👏 monocausal 👏…”

Virtue ethics is what it says on the tin. Actions are good so long as they align with virtues, or bad if they align with vices. Different flavors of virtue ethics will have different lists of virtues and vices, what makes them so, and how to judge if an action is in alignment.

Quality-adjusted life years. QALYs are a way to try and make “well being” into a fungible, measurable unit. Since not every year of life is as ‘good’ as any other (an extra year of life when bed-ridden and disastrously ill isn’t as good as a year of good health) QALYs account for this. In theory, any QALY is just as good as any other. QALYs are often, though very much not always, used as the utility objective - that thing that should be maximized or optimized for under total or average utilitarianism.
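The arithmetic is simple: each stretch of time is multiplied by a quality weight between 0 (dead) and 1 (full health), and the results are summed. A minimal sketch follows. The helper name `qalys` and the 0.3 weight for the bed-ridden year are made-up values for illustration, not figures from any real health study.

```python
def qalys(periods):
    """Sum years * quality weight over (years, weight) pairs.

    A weight of 1.0 means full health and 0.0 means dead.
    """
    return sum(years * weight for years, weight in periods)


# One year in good health versus one year bed-ridden.
# The 0.3 weight is an assumed, illustrative number.
healthy_year = qalys([(1.0, 1.0)])
bedridden_year = qalys([(1.0, 0.3)])
```

Under these assumed weights, the healthy year counts as one full QALY and the bed-ridden year as less than a third of one.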

Hyperreality is especially aggressive around AI terminology, seeking to consume the territory and replace it with a progressively shittier map. The speed with which the Nothing comes after AI concepts is straight Society of the Spectacle insanity.

Dropping the jargon, this is to say that each time the rat/EA/AI community develops some technical terminology to describe a very particular phenomenon, it is soon destroyed as the MBAs chase in and rebrand their latest slop with the latest cool-sounding techy terms. This is how we got the AI → AGI → ASI pathway as soon as advertisers learned that any company or product in “digital” probably used some degree of machine learning, so it’s “AI”! The same thing happened with the terms that the x-risk folk used. AI “Safety” went from being about preventing human extinction to how well lobotomized your LLM was to never do a racism or say something possibly insensitive. “Alignment” went from avoiding the universe being turned into paperclips to ensuring you RLHFed your model hard enough to ensure it would adhere to the party line. Thus,

suggested using:

If you somehow don’t already know what this is, don’t go looking for it. This is a legitimate case of an infohazard where ignorance = bliss. But if you don’t know, let me explain the inversion - the totalizing urgency of AI x-risk combined with a strict utilitarian ethic shakes out to a black & white, “you’re either with us or against us” kind of mentality with literally the highest possible stakes. If you fail in your mission to prevent AI before solving “alignment”, then that’s it. Everybody dies. No more humans. The universe gets terraformed into the most boring possible configuration (i.e., not even the AI carries the light of consciousness into the future, it just paves paradise to put up infinite parking lots absent any cars).

If you didn’t do everything to prevent this, then you basically caused it to happen.

If you aren’t familiar with the FTX scandal and Sam Bankman-Fried, well, it’s a whole big thing. Here’s the short version:

A smart college kid who was attracted to EA and seemed poised to make buttloads of money upon graduation got courted and indoctrinated into EA by some of the leading thinkers I’ve already mentioned. They convinced him to go into finance so he could get disgustingly rich and then use all of that money to fund EA activities and improve the world. After all, the more you earn, the more you can give. What could be more effective than becoming a billionaire and then using all that money altruistically? Anyways, the guy, SBF, eventually opens a big crypto trading company (FTX) and donates hundreds of millions to EA organizations and causes. Success! Until FTX collapses and all sorts of sordid details come out about unethical practices. Billions of dollars of value were wiped out. Many people were financially destroyed, and lives were ruined. All by the guy who was supposedly doing everything according to an ethical calculus of achieving the most good for the most people. Yikes.